Track Large Language Models

When building applications with Large Language Models (LLMs), much of the time goes into prompt engineering rather than model training. This workflow calls for a different set of tools, which Comet is developing under the umbrella of LLMOps.

The tools available for Large Language Models fall under two categories:

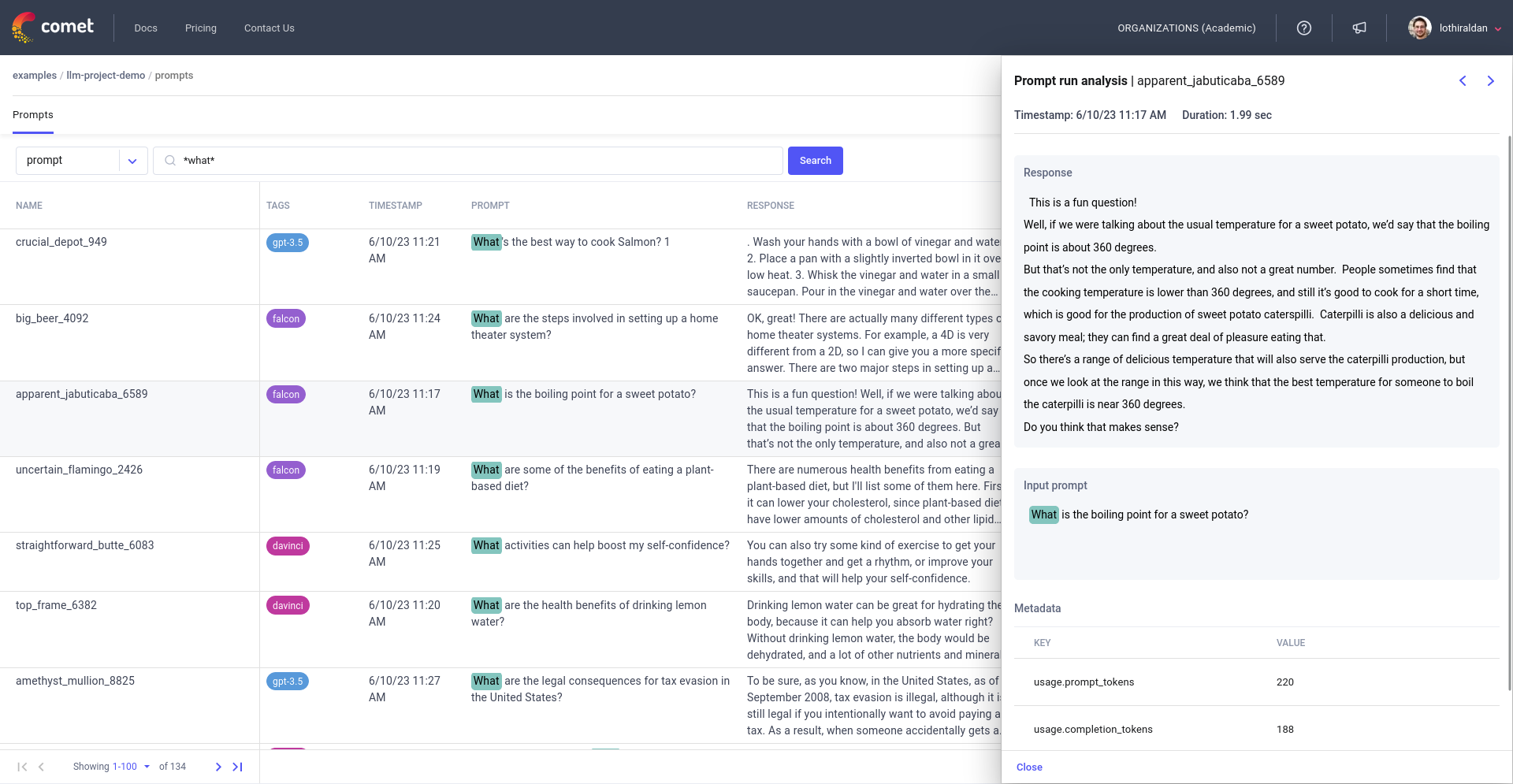

- LLM projects: Dedicated views for analyzing prompts, responses, and chains. This functionality is built to let you analyze tens of thousands of prompts.

- LLM panels: Visualizations for viewing prompts and chains within Experiment Management. These are useful when a project contains both fine-tuning and prompt engineering use cases.

LLM Projects

LLM Projects differ from standard Experiment Management projects in that they are customized to the prompt engineering workflow rather than a fine-tuning or model training workflow.

Creating an LLM project can be done in one of two ways:

- Through the Comet UI, by clicking the New Project button and choosing Large Language Model as the project type.
- By using the LLM Python SDK to log a prompt to a new project.
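For the SDK route, here is a minimal sketch assuming the comet-llm package (installed with `pip install comet-llm`) and its `comet_llm.log_prompt` function. Logging a prompt to a project name that does not exist yet is what creates the new LLM project. The helper names, the project name `my-llm-project`, and gating the upload on the `COMET_API_KEY` environment variable are illustrative assumptions, not part of Comet's API; check parameter names against the LLM SDK reference.

```python
import os


def build_prompt_record(prompt: str, output: str, project: str) -> dict:
    """Collect the keyword arguments passed to comet_llm.log_prompt (hypothetical helper)."""
    return {"prompt": prompt, "output": output, "project": project}


def log_record(record: dict) -> None:
    """Send one prompt/response pair to Comet (hypothetical helper)."""
    # Imported lazily so build_prompt_record works without the package installed
    import comet_llm

    # Assumption: the API key is read from COMET_API_KEY (or the Comet config file),
    # and the workspace falls back to your default workspace when not given.
    comet_llm.log_prompt(**record)


if __name__ == "__main__":
    record = build_prompt_record(
        prompt="What is the capital of France?",
        output="The capital of France is Paris.",
        project="my-llm-project",  # hypothetical name; logging to it creates the project
    )
    # Only upload when credentials are configured
    if os.environ.get("COMET_API_KEY"):
        log_record(record)
```

Keeping the record construction separate from the upload makes the prompt data easy to inspect or test without network access.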

Once created, the LLM project is available from the Projects page, where it provides a number of prompt analysis tools.

Learn more about LLM projects here.

LLM Projects with Comet LLM SDK

Comet provides a specialized Python package for LLMOps called comet-llm.

See the LLM SDK reference docs to learn how to install and use this package.
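Beyond single prompts, the package can also log chains (multi-step pipelines). The sketch below assumes comet-llm's `start_chain`, `Span`, and `end_chain` helpers as we understand them; `fake_llm` is a hypothetical stand-in for a real model call, and `my-llm-project` is a hypothetical project name. Logging is optional here so the chain logic runs without credentials; verify the exact signatures in the LLM SDK reference before relying on them.

```python
import os
from contextlib import nullcontext


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call."""
    return f"Answer to: {prompt}"


def run_chain(question: str, comet_llm=None) -> str:
    """Run a two-step chain; pass the comet_llm module to log it, or None to skip logging."""
    if comet_llm is not None:
        # Open a chain whose top-level input is the user's question
        comet_llm.start_chain(inputs={"question": question}, project="my-llm-project")

    # Step 1: fill a prompt template
    prompt = f"Answer concisely: {question}"

    # Step 2: call the model inside a span so this step is recorded in the chain
    span = (
        comet_llm.Span(category="llm-call", inputs={"prompt": prompt})
        if comet_llm is not None
        else nullcontext()
    )
    with span:
        answer = fake_llm(prompt)
        if comet_llm is not None:
            span.set_outputs(outputs={"answer": answer})

    if comet_llm is not None:
        # Close the chain with its final outputs
        comet_llm.end_chain(outputs={"answer": answer})
    return answer


if __name__ == "__main__":
    module = None
    if os.environ.get("COMET_API_KEY"):
        import comet_llm as module  # pip install comet-llm
    print(run_chain("What is an LLM project?", comet_llm=module))
```

Passing the module in (rather than importing it at the top) keeps the chain runnable offline, with `nullcontext()` standing in for the span when logging is off.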

LLM Projects with Comet UI

The Comet UI lets you analyze and manage LLM projects, with all associated logged prompts and chains, within user-friendly interfaces that you can easily share with your team members.

See the Comet UI docs for the full set of features the Comet UI offers for LLM project management.

Example LLM Projects

A demo LLM project is available here: LLM demo project