Issue 15: Tesla’s ‘AI Day,’ AI Story Generation, Model Monitoring Tips

Tesla doubles down on becoming an AI leader, ML research’s struggles with reproducibility, a deep dive into automated story generation, and more

Welcome to issue #15 of The Comet Newsletter!

This week, we cover Tesla’s AI Day, which included a go-go dancer acting as a humanoid robot, as well as a deep dive into the history and current state of AI narrative generation.

Additionally, we highlight some excellent tips for monitoring models in production and ML research’s struggles with a core scientific principle.

Like what you’re reading? Subscribe here.

And be sure to follow us on Twitter and LinkedIn — drop us a note if you have something we should cover in an upcoming issue!

Happy Reading,

Austin

Head of Community, Comet

INDUSTRY | WHAT WE’RE READING | PROJECTS | OPINION

Tesla hosts “AI Day” to tout company’s AI capabilities

When we think of Tesla and AI, we might be forgiven for thinking exclusively of the camera-based computer vision systems that power its autonomous vehicle projects. But in an effort to rebrand its AI capabilities as “deep AI activity in hardware on the inference level and on the training level”, the company recently hosted an all-day event focused explicitly on these capabilities.

Rebecca Bellan and Aria Alamalhodaei’s excellent recap in TechCrunch focused on a few specific takeaways from the event: Tesla’s AI-dedicated hardware, the problems it’s working to solve with computer vision (i.e., data generation and management), and, perhaps most strangely, a humanoid robot that turned out to be a professional dancer in a prototype robot suit.

Whether or not Tesla can actually build the fully humanoid robot of sci-fi lore, one thing is clear: Tesla is doubling down on becoming a leading AI company, not just a company that uses AI in its flagship products.


ML Model Monitoring—9 Tips From the Trenches

In recent years, the accessibility of machine learning model development has rapidly expanded as a result of easier-to-use training frameworks, low/no-code platforms, pretrained models, free compute resources, and more.

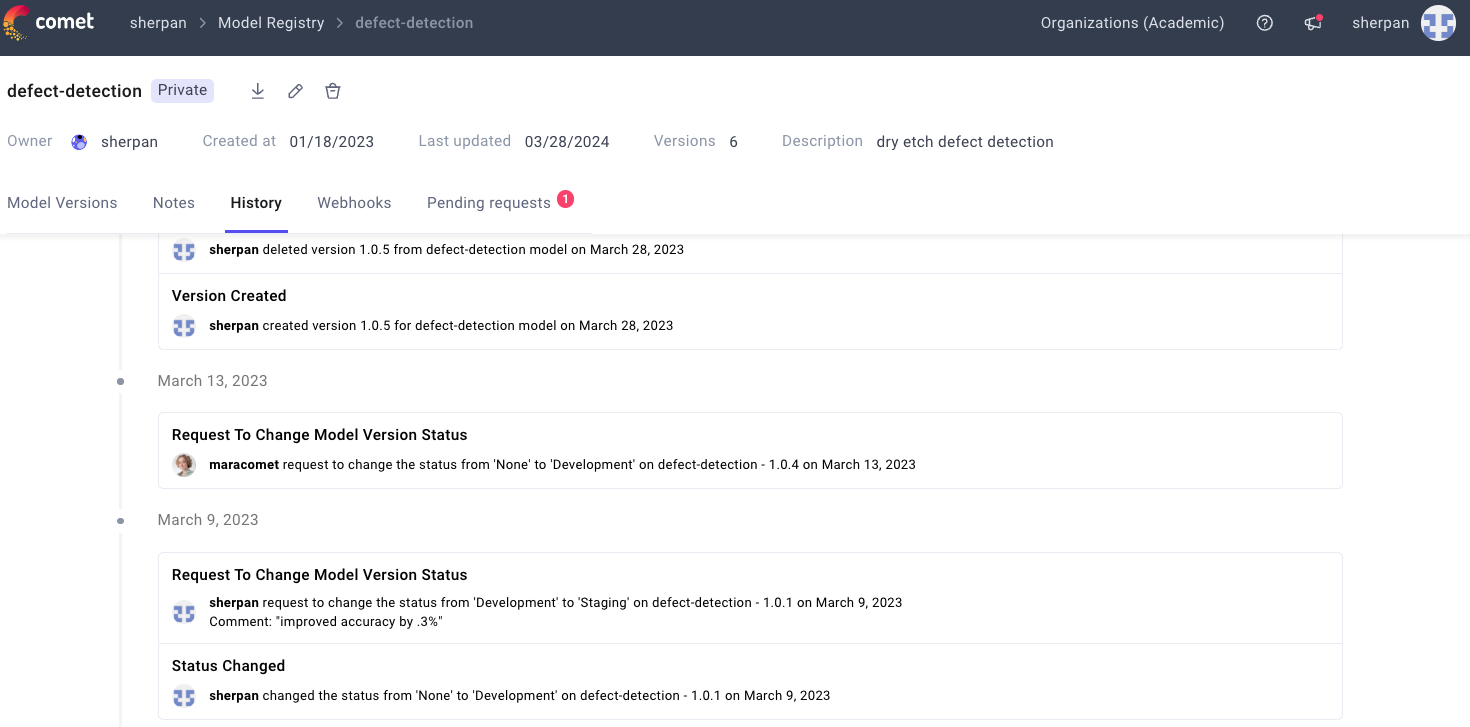

But for businesses trying to gain significant value from their ML projects, being able to build performant models is only one piece of the puzzle. In this excellent blog post, Felipe Almeida, an ML Engineer at Nubank, explores some of the core concepts of “ML Model Monitoring”, that is, the phase of the ML lifecycle in which teams track and evaluate a model’s performance once it’s in production and making predictions on real-world data.

If an ML system’s value lies in automating, or even strongly recommending, a business decision, then practitioners and teams need ways to ensure that those decisions rest on models that are working as intended. Because this is so essential to any ML project, we decided to build model monitoring capabilities at Comet, and it is the core concern of Almeida’s blog post.

In the post, Almeida offers 9 tips for other ML teams implementing monitoring solutions, ranging from the problems of relying on average values, to the need to split monitoring data into subsamples, to several tips intended specifically for production models that operate in real time.
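To make the first two tips concrete, here is a minimal sketch (not taken from Almeida’s post) of how a single average can hide a failing subsample. It assumes a hypothetical stream of monitoring records, each a `(segment, prediction_was_correct)` pair; the segment names, the made-up counts, the 0.8 alert threshold, and the helper functions are all illustrative assumptions.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical monitoring records: (segment, prediction_was_correct).
# The counts are invented purely for illustration.
records = (
    [("segment_a", True)] * 90 + [("segment_a", False)] * 10
    + [("segment_b", True)] * 85 + [("segment_b", False)] * 15
    + [("segment_c", True)] * 5 + [("segment_c", False)] * 5
)


def overall_accuracy(records):
    """Accuracy averaged over every record, ignoring segments."""
    return mean(1.0 if correct else 0.0 for _, correct in records)


def accuracy_by_segment(records):
    """Accuracy computed separately for each segment (subsample)."""
    groups = defaultdict(list)
    for segment, correct in records:
        groups[segment].append(1.0 if correct else 0.0)
    return {segment: mean(values) for segment, values in groups.items()}


def flag_segments(records, threshold):
    """Return the sorted names of segments whose accuracy falls below threshold."""
    return sorted(
        segment
        for segment, accuracy in accuracy_by_segment(records).items()
        if accuracy < threshold
    )


if __name__ == "__main__":
    print(f"overall accuracy: {overall_accuracy(records):.3f}")
    for segment, accuracy in sorted(accuracy_by_segment(records).items()):
        print(f"{segment}: {accuracy:.3f}")
    print("segments below 0.8:", flag_segments(records, 0.8))
```

With these invented numbers, the overall accuracy (about 0.857) clears the 0.8 threshold, while the small `segment_c` sits at 0.5. An alert watching only the global average would stay silent, which is the kind of blind spot that splitting monitoring data into subsamples is meant to expose.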

Read Almeida’s full blog post here.


An Introduction to AI Story Generation

In this new deep dive in The Gradient, Georgia Tech Professor Dr. Mark Riedl explores the history, evolution, challenges, and advancements in a specific creative pursuit of AI systems: story generation. More specifically, Riedl defines automated story generation as “the use of an intelligent system to produce a fictional story from a minimal set of inputs.”

From this baseline definition, Riedl leaves no stone unturned: he explores the core aspects of narrative psychology, non-AI approaches to story generation, and the evolution of the machine learning and neural-network-based approaches to story generation that we encounter today (recurrent neural nets, transformers, etc.).

Overall, this is an incredible primer on automated story generation that provides plenty of history, context, and nuanced discussion about the nature and inner workings of these systems.

Read Dr. Riedl’s full deep dive here.


AI research still has a reproducibility problem

Using a guiding example from multi-agent reinforcement learning (MARL), Kyle Wiggers of VentureBeat explores the many problems that persist around a foundational scientific practice that machine learning continues to struggle with: reproducibility.

Wiggers notes that today’s ML studies rely heavily on benchmark results in lieu of providing source code. The problems with this approach are multifaceted, but one glaring issue is that benchmark performance, for a number of reasons, isn’t always an accurate indicator of how a given model will work in the real world. And without source code included in the studies, other ML engineers and researchers have no way to reproduce and validate a given study, which makes the standard scientific practice of replication difficult to carry out.

What exactly constitutes reproducibility in machine learning is still up for debate. But researchers at McGill University, the City University of New York, Harvard, and Stanford recently offered some perspective in a letter published in Nature. In the letter, which responded to Google’s research on using ML for breast cancer diagnostics, the researchers outlined several core expectations for reproducibility:

- Descriptions of model development, data processing, and data pipelines

- Open-sourced code and training sets, or at least model predictions and labels

- Disclosure of the variables used in dataset augmentation